Artificial intelligence from a union perspective

Recently, I had a conversation with a union representative, and she said that there is still a lot of uncertainty about how to approach artificial intelligence. Just look at what workers’ councils are saying about employees using private ChatGPT accounts for work: some support it, while others oppose it. There are good arguments for both sides.

Unions are interesting organizations. Historically, they have been very successful in improving working conditions, social security, and democratic and political rights. Even today, they have 5.6 million members in Germany and 1.2 million members in Austria.

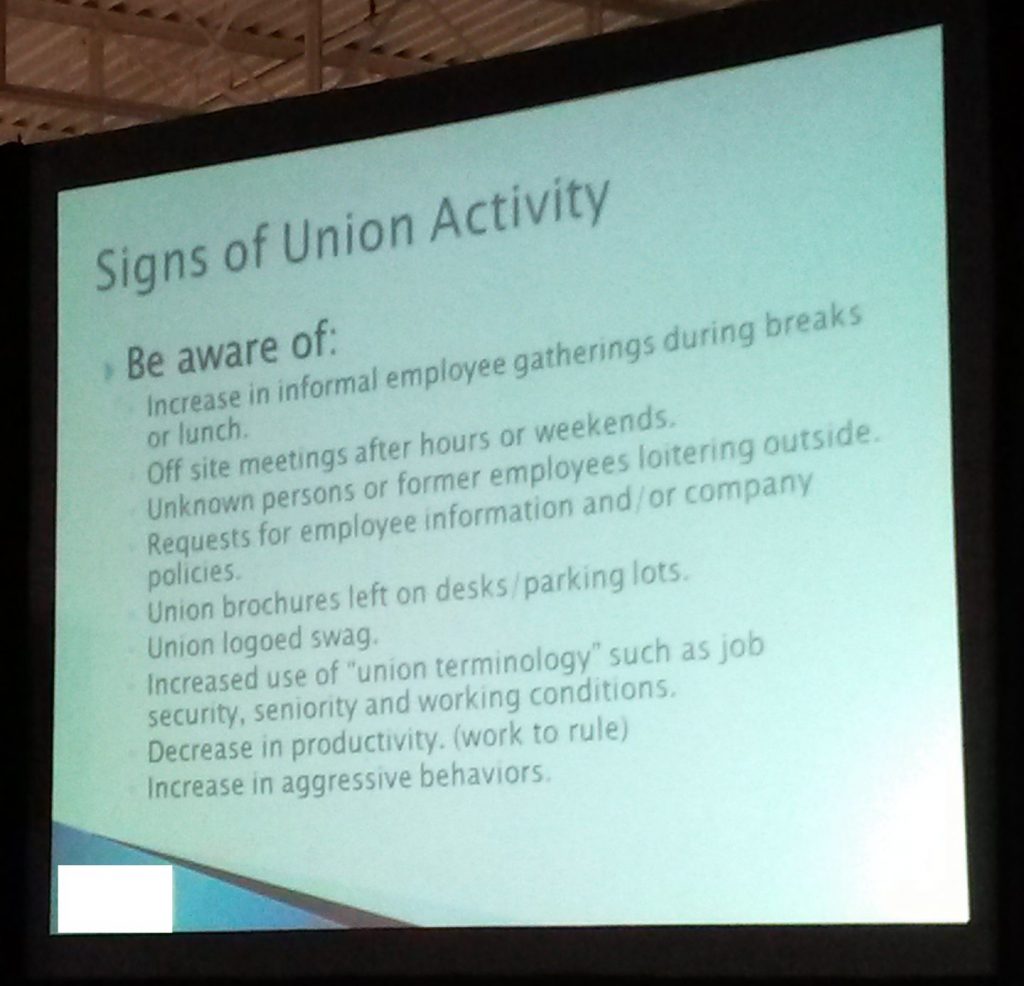

Conversely, many companies try to reduce the influence of unions. A few years ago, I attended a security conference and trade show in Toronto, and one of the sessions was about strategies to combat unionization. The image above shows what you should be looking for.

Let us look at some examples of why company management often dislikes unions. They come from Hollywood, the New York fashion and media industries, and Las Vegas entertainment.

Let us start with Las Vegas. Las Vegas unions are considered among the most powerful unions in the US. The background is that the hospitality sector there is expected to profit from technologies such as self-check-in stations, automated valet ticketing and robot bartenders known as “tipsy robots.”

The Culinary Workers Union in Las Vegas, which represents tens of thousands of hotel and casino employees, has negotiated an agreement with a strong focus on regulating new technologies, also known as technology protection.

A few parts of the agreement are noteworthy:

- Safety Net: If a job is eliminated due to technology, the employee receives a severance payment of $2,000 per year of service and the right to preferential placement in other open positions within the company. That is a lot of money.

- Advance Warning: Employers must notify the union before introducing new technologies.

The contract runs for five years and makes staff reductions expensive and unattractive for casinos, thereby encouraging them to preserve human labor. In general, the hospitality sector in Las Vegas seems to be clearly defined and short of workers, which explains the strength of its unions. Similar agreements seem unlikely for small restaurants or hotels in other countries.

Let us now move to the modeling industry. A few years ago, I shared a panel with the founder of the Model Alliance (essentially a union of models) and have followed their work since. Models are usually contractors with very few rights, which means that they need to pursue change through legislation.

A law blog summarized one of the key problems related to artificial intelligence as “control over image and likeness is a model’s currency.” Some models have already found that the rights to their images were signed away after they did a body scan. In other words, some companies created digital replicas of them that can be sold. Similar discussions can also be found in Hollywood.

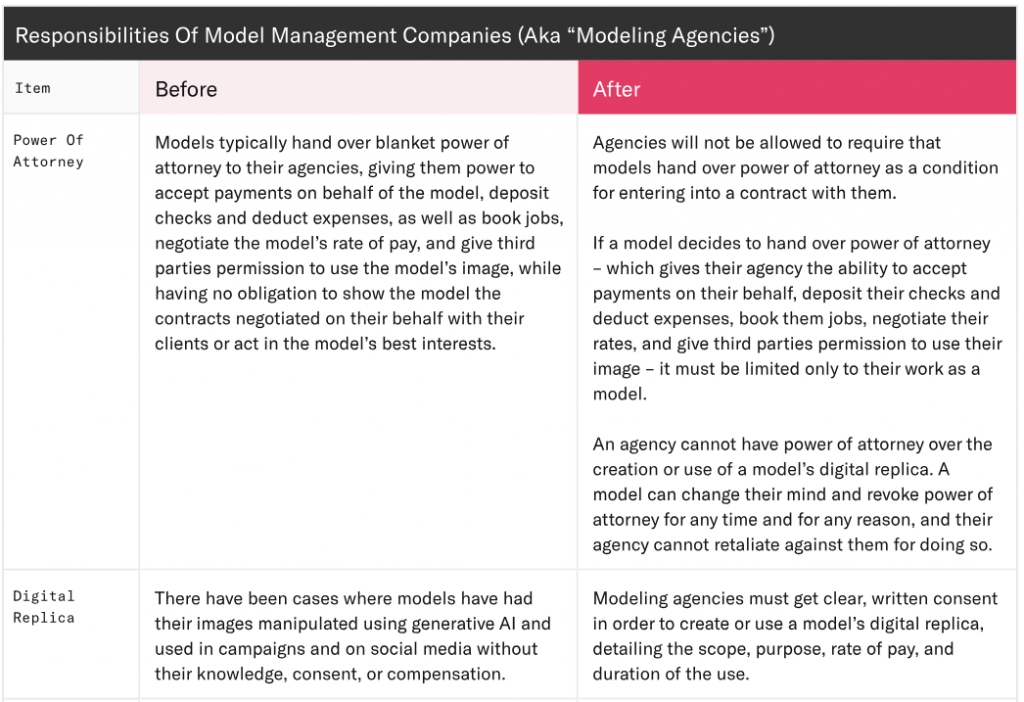

The New York State Fashion Workers Act has been in effect since June 2025, and two aspects are noteworthy:

- Digital Replicas: The law prohibits management agencies from signing contracts that include the right to create digital replicas without separate, written consent. This consent must specify the scope, purpose, compensation, and duration in detail.

- Prohibition of AI Manipulation: It is illegal to alter or manipulate a model’s digital likeness using AI without explicit consent.

The changes before and after the act are also shown in the screenshot above.

Let us now move to the news industry. In 2024, the Ziff Davis Creators Guild (part of the NewsGuild of New York) reached an agreement for employees of PCMag, Mashable, and Lifehacker. It includes the following provisions:

- No impersonation: As in the examples above, generative AI may not be used to impersonate a specific journalist or team without their consent.

- Protection Against Dismissal: The contract, which runs for three years, explicitly bans layoffs justified by the introduction of generative AI.

- Editorial Integrity: AI-generated content must always be subject to human review and must be transparently labeled for the reader.

Like the examples above, this agreement protects jobs and introduces guidelines on the use of generative AI.

Hollywood is another good example. Studios can use generative AI to produce videos, generate voices, write scripts and create music, which creates considerable potential for conflict. The 2023 strikes by US screenwriters (WGA) and actors (SAG-AFTRA) were the first major industrial conflict of the AI era. You might remember that some late-night shows went off the air during this time.

For example, studios planned to scan background actors once, pay them for a single day, and then use their digital replicas in films indefinitely, without further compensation or consent. For writers, the danger was that AI models would write or rewrite scripts, reducing authors to mere editors.

Let us discuss the agreements for the actors and the writers.

SAG-AFTRA (Screen Actors Guild – American Federation of Television and Radio Artists) is the major US labor union for approximately 160,000 media professionals.

The Guardian reports the following:

The union also won hard-fought guardrails for the use of AI. Under the new agreement, studios cannot create a digital replica of an actor without first obtaining their consent, and actors will receive payment based on the type of work the digital replica performs on-screen. The contract provides protections for background performers so that their digital replicas cannot be used without consent.

It is interesting to note that actors are paid according to the work their digital twins perform.

The Writers Guild of America (WGA) is a labor union representing writers in film, television, news, and online media. Its summary of the agreement, the Minimum Basic Agreement (MBA), is worth reading:

- AI can’t write or rewrite literary material, and AI-generated material will not be considered source material under the [agreement], meaning that AI-generated material can’t be used to undermine a writer’s credit or separated rights.

- A writer can choose to use AI when performing writing services, if the company consents and provided that the writer follows applicable company policies, but the company can’t require the writer to use AI software (e.g., ChatGPT) when performing writing services.

- The Company must disclose to the writer if any materials given to the writer have been generated by AI or incorporate AI-generated material.

- The WGA reserves the right to assert that exploitation of writers’ material to train AI is prohibited by MBA or other law.

What can we learn from the examples in New York, Las Vegas and Los Angeles?

- Digital replicas: It is easy to imagine creating a digital replica of an employee. Just monitor every digital step, and you will have a good idea of how to automate their workflows. This will surely become an area where employees gain additional rights, which can build on existing protections against surveillance technologies.

- Technology protection: Employees in Las Vegas receive $2,000 per year of service if they lose their job due to automation. Such protection seems rather unlikely to spread across industries and countries.

- Upskilling and reskilling: Union position papers place a strong emphasis on upskilling and reskilling. This seems to be a key approach to artificial intelligence from a union perspective and is in line with public policy.

- Transparency: In many contexts, it is already common practice to inform current and potential employees about how the algorithms affecting them work. It also seems sensible that if a system is too opaque to be explained, it should not be deployed in the workplace.

- Liability: There should also be a discussion about liability for the use of chatbots. It is not entirely clear whether employees are liable if using a private ChatGPT account leads to errors in their work.